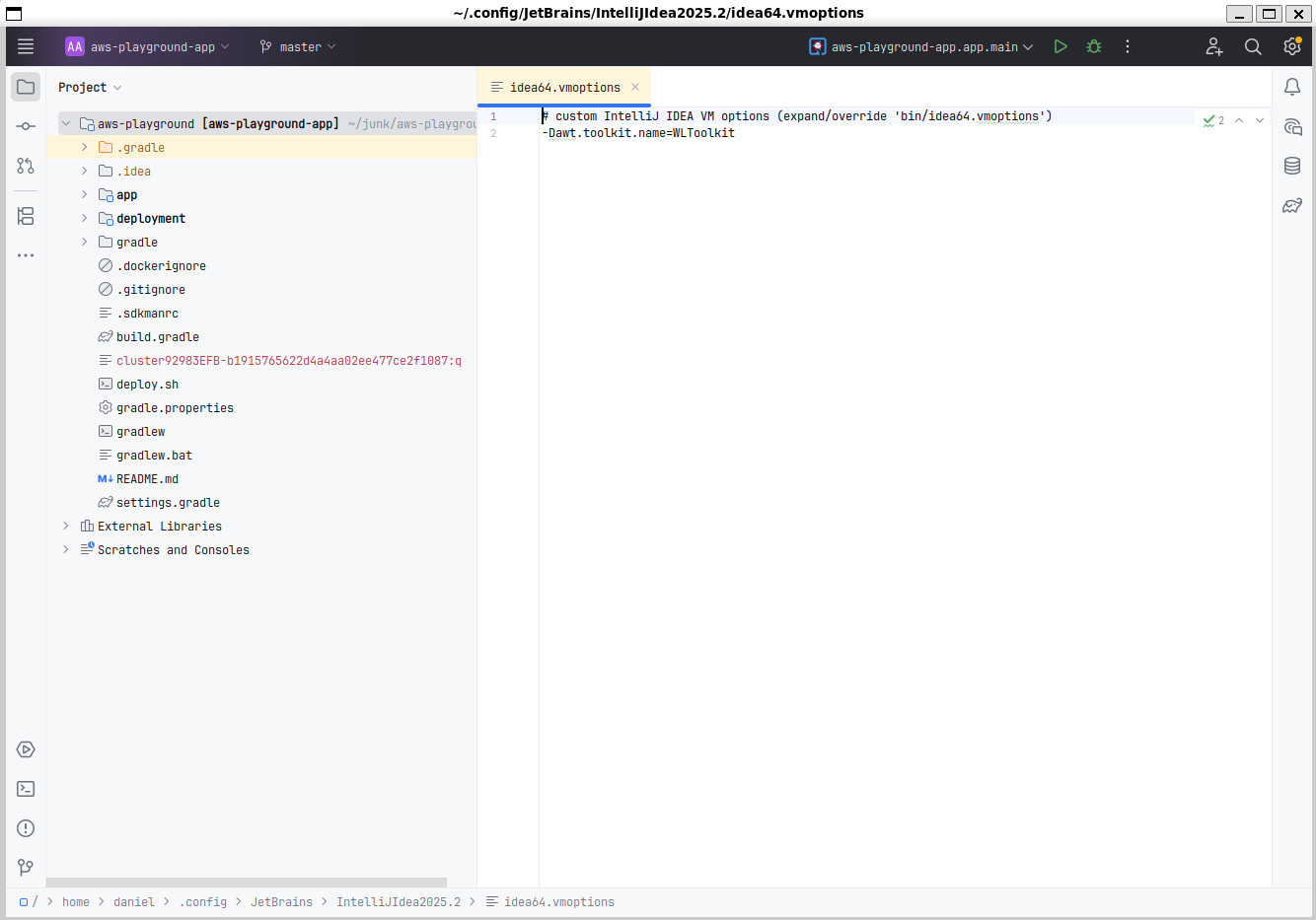

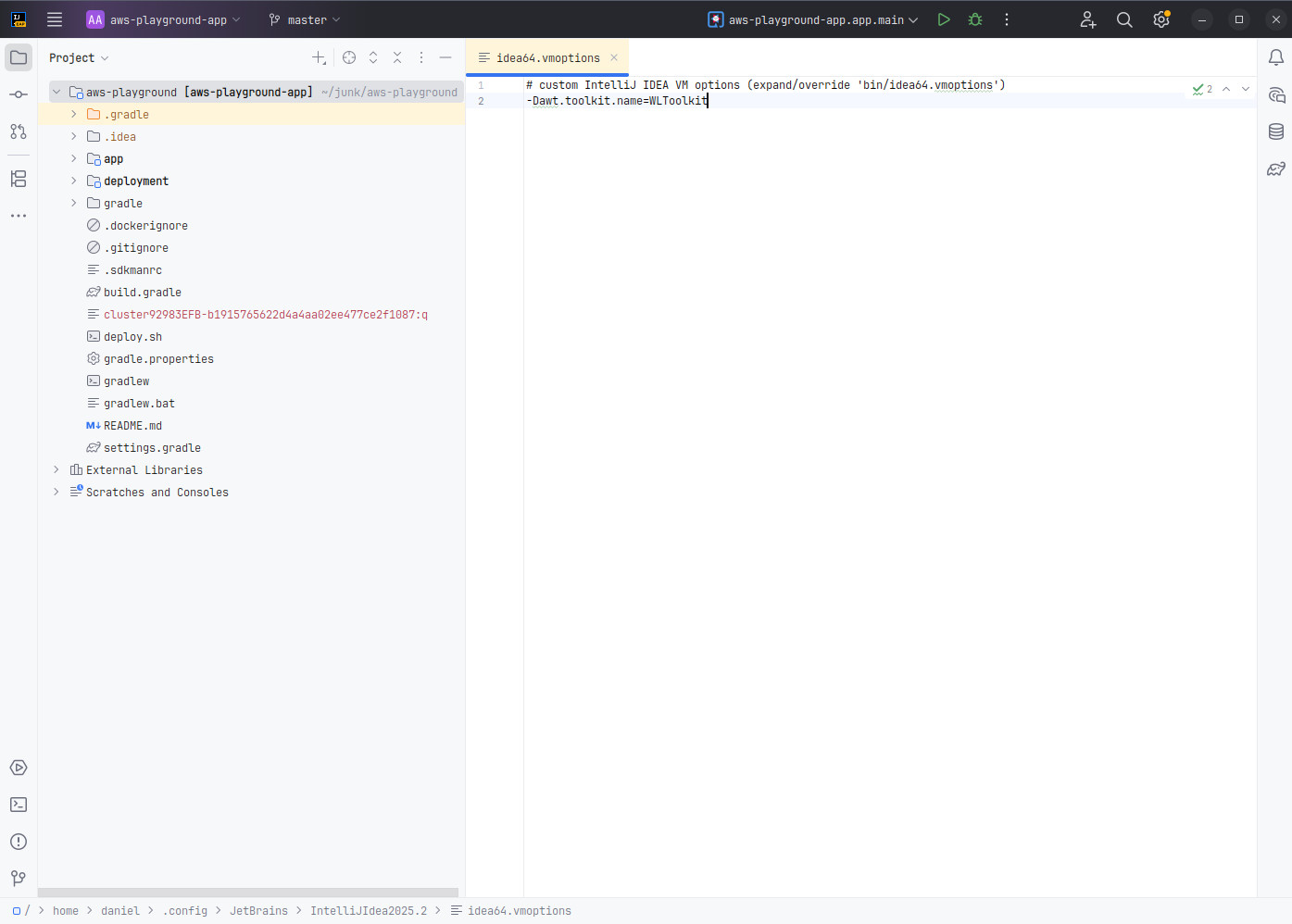

# custom IntelliJ IDEA VM options (expand/override 'bin/idea64.vmoptions') -Dawt.toolkit.name=WLToolkit

Blog

Window decoration for IntelliJ Idea in WSL

29 June 2025

You can thank me later but first go to Help → Edit custom VM Options.. and add:

The issue on the Jetbrains issue-tracker is this.

Running a local LLM on Ollama and LangChain4J

01 January 2024

When working on my personal projects, I see myself quiet often reaching out to ChatGPT for a quick explaination or tip. Unfortunately, this is not possible/allowed in most corporate environments. That’s why I was naturally very interested in what it takes to run a Large Language Model (LLM) locally on my machine and maybe even enrich it with some domain knowledge and see if it can help in my daytime job for some use-case (either by fine-tuning wich is harder or by retrieval augmented generation (RAG)).

The AI journey for me just started, that’s why the goal of this post is to show how easy it is to run an LLM locally and access it from a Java-based application leveraging LangChain4J. As everyone has some favorite tools in his belt, it is natural to use them. That’s why my code example below is a self-contained JBang script that is leverarging Quarkus and it’s LangChain4J extension. You can just as easily cut Quarkus out of the picture and use LangChain4J directly, but I was especially interested in the state of the Quarkus Integration for LangChain4J.

So, as you might have understood by now, LangChain4J is a big part of what allows you to access an LLM. What is important to understand here, is that it is only an abstraction to program against different AI services. LangChain4J does not actually run/host an LLM. For that we will need another service that runs the LLM and exposes it so LangChain4J can access it. As a matter of fact, LangChain4J can integrate with OpenAI’s GPT models as they expose a Restful API for it. In a similar fashion we can run an LLM locally with Ollama and configure the exposed Restful endpoint for LangChain4J to use. As this is just the beginning of the journey for me, I cannot explain to you what it would take to run/host an LLM in Java natively. For sure it must be technically possible, but then again, what is the big benefit?

Installing Ollama

So, first step is to install Ollama. I ran it under WSL on a Windows machine and the steps you can find here are as simple as they get:

curl https://ollama.ai/install.sh | sh

After this you need to download a model and then can interact with it via the commandline. If you have an already rather old Graphics cards like an NVidia RTX 2060 (with 6 GB VRAM), you can run a mid-sized model like Mistral 7b without problems on your GPU alone.

Run ollama run mistral which will download the model and then start a prompt to interact with it. The download is 4 GB, so it might take a few minutes depending on your internet speed.

If you feel like your PC is not capable of running this model, maybe try orca-mini instead and run ollama run orca-mini:3b.

Generally, the models should be capable to run on a compatible GPU or fall back to running on the CPU. In case of running on the CPU, you will need a corresponding amount of RAM to load it.

Ollama will install as a service and expose a Restful API on port 11434. So, instead of using the command prompt you can also try to hit it via curl for a first test:

curl -i -X POST http://127.0.0.1:11434/api/generate -d '{"model": "mistral", "prompt": "Why is the sky blue?"}'

Note that you have to provide your model that you download before as the model parameter.

Quarkus and Langchain4J

If this is working we can come to the next step and use the LLM from within our Java application. For that, we need the LangChain4J library which can talk to our Ollama service. Also, as I am a big fan of JBang and Quarkus, these were my natural choice for integrating with LangChain4J. But you can just as well use Langchain4J directly without any framework. See this test for the most basic integration between Langchain4J and Ollama.

Now lets come to this self-contained JBang script that will interact with the Ollama-based LLM:

///usr/bin/env jbang "$0" "$@" ; exit $?

//DEPS io.quarkus.platform:quarkus-bom:3.6.4@pom

//DEPS io.quarkus:quarkus-picocli

//DEPS io.quarkus:quarkus-arc

//DEPS io.quarkiverse.langchain4j:quarkus-langchain4j-ollama:0.5.1 (1)

//JAVAC_OPTIONS -parameters

//JAVA_OPTIONS -Djava.util.logging.manager=org.jboss.logmanager.LogManager

//Q:CONFIG quarkus.banner.enabled=false

//Q:CONFIG quarkus.log.level=WARN

//Q:CONFIG quarkus.log.category."dev.langchain4j".level=DEBUG

//Q:CONFIG quarkus.langchain4j.ollama.chat-model.model-id=mistral (2)

import static java.lang.System.out;

import com.fasterxml.jackson.annotation.JsonCreator;

import dev.langchain4j.service.SystemMessage;

import dev.langchain4j.service.UserMessage;

import io.quarkiverse.langchain4j.RegisterAiService;

import jakarta.enterprise.context.control.ActivateRequestContext;

import jakarta.inject.Inject;

import picocli.CommandLine;

@CommandLine.Command

public class QuarkusLangchainOllama implements Runnable {

@Inject

TriageService triage;

@Override

@ActivateRequestContext (3)

public void run() {

String review = "I really love this bank. Not!";

out.println("Review: " + review);

out.println("...");

TriagedReview result = triage.triage(review);

out.println("Sentiment: " + result.evaluation());

out.println("Message: " + result.message());

}

}

@RegisterAiService

interface TriageService {

@SystemMessage("""

You are working for a bank, processing reviews about

financial products. Triage reviews into positive and

negative ones, responding with a JSON document.

"""

)

@UserMessage("""

Your task is to process the review delimited by ---.

Apply sentiment analysis to the review to determine

if it is positive or negative, considering various languages.

For example:

- `I love your bank, you are the best!` is a 'POSITIVE' review

- `J'adore votre banque` is a 'POSITIVE' review

- `I hate your bank, you are the worst!` is a 'NEGATIVE' review

Respond with a JSON document containing:

- the 'evaluation' key set to 'POSITIVE' if the review is

positive, 'NEGATIVE' otherwise

- the 'message' key set to a message thanking or apologizing

to the customer. These messages must be polite and match the

review's language.

---

{review}

---

""")

TriagedReview triage(String review);

}

record TriagedReview(Evaluation evaluation, String message) {

@JsonCreator

public TriagedReview {}

}

enum Evaluation {

POSITIVE,

NEGATIVE

}-

The required dependency to interact with Ollama.

-

The model needs to be configured as this is needed for the

modelparameter in the Restful request to Ollama. -

Without this, I got an error that RequestScope is not initalized. But the error-message from Quarkus was very helpful and directly gave me the solution.

You can find the source-code/the JBang script here.

I don’t want to explain the main code that much as I just took the example from this awesome LangChain4J post by the Quarkus guys and you can read about it over there, but I think there is one quiet awesome fact that needs to be pointed out about it:

In the prompt we are telling the LLM to return a JSON structure with specific key names. Based on this, we are setting up our JSON-serializable POJOs named TriageReview and Evaluation.

In case the LLM returns a correct JSON structure (which the Mistral model did for me), Quarkus can deserialize it into an instance of TriagedReview. So, even though LLMs are widely seen as chat bots and usally return human-readable text, it is not limited to this.

There is no need to do any kind of manual parsing of the responses. As it is directly returning JSON, it is just as if you were calling an Restful endpoint via an OpenAI specification.

As I was saying before, LangChain4J offers an abstraction over different AI services. You could have skipped the setup of Ollama completly and just tried it out with OpenAI’s GPT-3 or GPT-4. The main difference would have just been to change the dependency from io.quarkiverse.langchain4j:quarkus-langchain4j-ollama:0.5.1 to io.quarkiverse.langchain4j:quarkus-langchain4j-openai:0.5.1.

The last thing to do is to run the script via the JBang CLI. It should rate the sentiment of the given comment as negative in case it works as expected.

jbang run --quiet QuarkusLangchainOllama.java

Have fun with it.

Ramblings in AI

21 November 2023

As I am progressing with my learnings in AI, I wanted to have a way to keep a diary. Though JBake is great for my main Java-based blog, it is not perfect for documenting in the Python realm. Here, Jupyter notebooks are king. For that reason, I set up a seperate page to document my ramblings in Python and AI under https://dplatz.de/aiblog/).

Deploying a Quarkus application using sticky sessions to AWS EKS using AWS CDK

17 September 2023

JSF applications rely on sticky sessions. This means, the server-side JVM maintains state (usally in memory) for a particular user/client. For this, each request needs to be routed to the same JVM; in Kubernetes language: to the same pod. The relation between client and server is achieved by sending a session-cookie to the browser. The browser sends this cookie to the server in every request. Now the infrastructure in between needs to be set up so it recognizes this cookie (it needs to be aware about this cookie / the name of the cookie) and routes / pins the request appropriately to the same pod.

I wanted to see how exactly this needs to be set up in the context of AWS Elastic Kubernetes Service (EKS). For that I created this Github repository. It uses AWS' Java CDK to deploy the infrastructure (Elastic Container Registry, Elastic Kubernetes Service) and then deploys a simple Quarkus application that helped me verify the correct handling of the cookie / the stickyness.

The only step to run is ./deploy.sh all. This will provision the AWS infrastructure and then deploy the application assuming you have used AWS on your system before and have valid AWS credentials configured.

Running kubectl get pods -o wide you should see that because we have provisioned two EC2 nodes as part of the Kubernetes cluster that the pods are running on different nodes.

The output of the deploy.sh should have given you the public endpoint that was provisioned (Access @ http://<aws-public-endpoint>/hello should have been printed). Accessing this endpoing will print all environment variables of the pod. If you run http://<aws-public-endpoint>/hello?var=HOSTNAME it will print only the hostname of the pod. You should see that on each request you get a different pod due to the load balancer.

If you access http://<aws-public-endpoint>/hello/session instead, you should see that it should connect to the same pod each time because a cookie gets used.

The cookie name that is created in the code (https://github.com/38leinaD/aws-playground/blob/master/app/src/main/java/de/dplatz/TestResource.java#L29) needs to match the configuration of the Application Loadbalancer (ALB) in the ingress configuration (https://github.com/38leinaD/aws-playground/blob/master/deployment/k8s/services.yaml#L65; see stickiness.app_cookie.cookie_name=mycookie).

Please note that for the ingress to work properly, the ALB controller needs to be configured as part of provisioning the EKS cluster:

Cluster eksCluster = Cluster.Builder.create(this,"eks-cluster")

.vpc(vpc)

.vpcSubnets(List.of(

SubnetSelection.builder().subnetType(SubnetType.PUBLIC).build(),

SubnetSelection.builder().subnetType(SubnetType.PUBLIC).build(),

SubnetSelection.builder().subnetType(SubnetType.PUBLIC).build()))

.defaultCapacity(2)

.defaultCapacityInstance(InstanceType.of(InstanceClass.T3, InstanceSize.SMALL))

.defaultCapacityType(DefaultCapacityType.EC2)

.mastersRole(clusterAdminRole)

.albController(AlbControllerOptions.builder() (1)

.version(AlbControllerVersion.V2_5_1)

.build())

.version(KubernetesVersion.V1_27).build();-

ALB controller required by the ingress

See here for the full CDK stack.

Don’t forget to run ./deploy.sh destroy at the end to shut everything down again.

Minimalist Webstandards with rollup.js

16 October 2022

I have been a long-time fan of what once was called Pika and now is called Snowpack.

Basically, it was the revolution how JavaScript web-apps are built. Instead of requiring a custom dev-server and doing a lot of "bundler magic" behind the scenes (basically every framework out there like Angular, Vue, etc. using Webpack), it just processed the Node dependencies and converted them into standard ES6 modules. What you could do now is reference this standard ES6 modules from your App without the need of a special build step on your application or custom dev-server. Modern browser can process imports like import { html, LitElement } from './lib/lit-element.js';. Just copy your HTML, standard/vanilla JS on a plain web-server (or use a generic tool like browser-sync) and way you go. You can read more about the general approach in one of my previous posts.

To me this approach always felt very natural, intuitive and did not introduce too much dependency on complex tools that lock you in. With Snowpack 3, I am getting the same vibe now like previously with Webpack. It has become a complex tool (includes bundeling, minification, etc.) that requires you to now use it’s own dev-server.

For this reason, I have now moved back to a lower-level tool which is called rollup.js. With rollup.js, we can convert Node dependency into standard ES6 modules. Nothing more and nothing less. You can find the full example project on GitHub.

The main parts are the package.json with dependecy to rollup and the webDependencies section that I have kept analogous to how Pika/Snowpack have it:

{

"name": "webstandards-starter",

"version": "1.0.0",

"description": "Starter project for web-development using the web's latest standards.",

"main": "src/AppMain.js",

"scripts": {

"postinstall": "rollup -c", (1)

"start": "browser-sync src -f src --single --cors --no-notify --single"

},

"repository": {

"type": "git",

"url": "git+https://github.com/38leinaD/webstandards-starter.git"

},

"author": "",

"license": "ISC",

"bugs": {

"url": "https://github.com/38leinaD/webstandards-starter/issues"

},

"homepage": "https://github.com/38leinaD/webstandards-starter#readme",

"devDependencies": {

"browser-sync": "^2.27.10",

"rollup": "^3.2.1", (2)

"@rollup/plugin-node-resolve": "^15.0.0"

},

"dependencies": {

"@vaadin/router": "^1.7.4",

"lit-element": "^3.2.2"

},

"rollup": {

"webDependencies": [ (3)

"@vaadin/router/dist/vaadin-router.js",

"lit-element/lit-element.js",

"lit-html/directives/async-append.js",

"lit-html/directives/async-replace.js",

"lit-html/directives/cache.js",

"lit-html/directives/class-map.js",

"lit-html/directives/guard.js",

"lit-html/directives/if-defined.js",

"lit-html/directives/repeat.js",

"lit-html/directives/style-map.js",

"lit-html/directives/unsafe-html.js",

"lit-html/directives/until.js"

]

}

}-

postinstallruns rollup when executingnpm install -

devDependency to rollup and rollup plugin

-

Similar

webDependenciesconfiguration as known from Pika/Snowpack

You can see that I added a postinstall step executing rollup -c. What this will do is call rollup on npm install and use the rollup.config.mjs file which looks like this:

import { nodeResolve} from '@rollup/plugin-node-resolve';

import * as fs from 'fs';

import * as path from 'path';

function outDir(relPath) {

const nodeModulesPath = `./node_modules/${relPath}`

const parentDir = path.dirname(relPath)

// Just some basic logic how to generated output-paths under src/lib

if (`${path.basename(parentDir)}.js` === path.basename(relPath)) {

// lit-element/lit-element.js is simplified to 'src/lib/lit-element.js'

return path.dirname(parentDir)

}

else {

return path.dirname(relPath)

}

}

export default JSON.parse(fs.readFileSync('package.json', 'utf8')).rollup.webDependencies.map(relPath => {

console.log("Processing:", relPath)

const nodeModulesPath = `./node_modules/${relPath}`

return {

input: [

nodeModulesPath

],

output: {

dir: 'src/lib/' + outDir(relPath),

format: 'esm',

},

plugins: [nodeResolve({

browser: true

})]

};

});What this does is the bare minimum of what Pika and Snowpack are also doing: Process each of the elements in webDependencies and convert the dependency into a standard ES6 module. The ES6 module is created under src/lib and allows for easy referencing via import from the application. After running the install-step, you can copy the app to any standard web-server; or use browser-sync for that matter.

I am not saying that this is the way to go for bigger commerical projects, but to me this makes for a simple and understandable setup that at least serves me well for learning purposes and personal projects. Eventually, most libraries/dependencies will come out of the box as modules and the rollup step can be eliminated completely.

AWS RDS with IAM Auth

06 August 2022

When looking up things in the offical AWS Docs, code examples often still refer to AWS SDK version 1. Whereas the latest version of the SDK is version 2 and completly different API-wise. Same so the other day when I needed to find out how to generate an IAM token to access the AWS RDS Aurora database.

Digging through a Github issue and a pull-request lead me to the solution:

import software.amazon.awssdk.auth.credentials.DefaultCredentialsProvider;

import software.amazon.awssdk.regions.Region;

import software.amazon.awssdk.services.rds.RdsUtilities;

import software.amazon.awssdk.services.rds.model.GenerateAuthenticationTokenRequest;

public class IamTokenGenerator {

public static String retrieveIamToken(String hostname, int port, String username) {

RdsUtilities rdsUtilities = RdsUtilities.builder()

.credentialsProvider(DefaultCredentialsProvider.create())

.region(Region.EU_CENTRAL_1)

.build();

GenerateAuthenticationTokenRequest tokenRequest = GenerateAuthenticationTokenRequest.builder()

.credentialsProvider(DefaultCredentialsProvider.create())

.region(Region.EU_CENTRAL_1)

.hostname(hostname)

.port(port)

.username(username)

.build();

return rdsUtilities.generateAuthenticationToken(tokenRequest);

}

}The following dependency is needed (RdsUtilities was only introduced in 2.16.3!):

implementation 'software.amazon.awssdk:rds:2.16.3'

Maybe this can save someone a few minutes.

Quarkus - OIDC - Offering a Login button

02 April 2021

Quarkus offers an integration with OpenIdConnect (OIDC). This means, you can use indentity providers like Keycloak, ForgeRock or AWS Cognito to delegate your authentication needs. With Keycloak, you can also have identity brokering with other identity providers. This means, people can sign up with your application/service via Keycloak directly or people can also select an option like "Login with GitHub".

For the general usage of OIDC with Quarkus, please refer to this guide. My post is about the specific need of offering a Login-button in your application; which I would have thought to be an out of the box feature. Don’t get me wrong; this is not hard to achieve, but also not trivial and well documented.

My general setup is a Quarkus application with server-side-rendered Web frontend. This may be JSF (the Quarkus Universe offers a MyFaces extension), but for me it was using the more lightweight Qute template library; which feels more like Spring MVC with Thymeleaf.

Problem Statement

So, what exactly do we want? If a user is not logged in, there should be a Login-button. Once this button is pressed, the user should be redirected to the identity provider’s login page. Once the login is done, I would like to redirect the user to same URL he was coming from. I.e. the user might be browsing a specific page or product in the catalog and decides to log in.

The thing with offering a login button is that there is no URL to the login page. The redirects to the identity provider happen in Quarkus internally by intercepting the requests and checking if a URL is protected or not. If a URL is protected and there is not valid Access Token already exchanged, the login is triggered. Also, in my case, most pages are not really protected but can be accessed by a unauthenticated as well as by a logged in user. The difference is just what actions are possible on the page.

This is the basic configuration for OIDC with Keycloak as my identity provider. You see, that quarkus.http.auth.permission.permit1 gives full access all URLs also users that are not logged in.

quarkus.oidc.enabled=true quarkus.oidc.auth-server-url=http://localhost:8180/auth/realms/quarkus quarkus.oidc.client-id=frontend quarkus.oidc.application-type=web_app quarkus.oidc.logout.path=/logout quarkus.oidc.logout.post-logout-path=/ quarkus.oidc.token.refresh-expired=true quarkus.oidc.authentication.session-age-extension=30M quarkus.http.auth.permission.permit1.paths=/* quarkus.http.auth.permission.permit1.policy=permit quarkus.http.auth.permission.permit1.methods=GET,POST

Solution

The way to offer a login button is by registering a URL/endpoint that is actually protected:

quarkus.http.auth.permission.authenticated.paths=/login quarkus.http.auth.permission.authenticated.policy=authenticated

This URL is not provided by Quarkus but needs to be provided by ourselfs:

@Path("/")

public class IndexResource {

// Other methods...

@GET

@Path("login")

public Response login(@QueryParam("redirect") String redirect) {

return Response.temporaryRedirect(URI.create(redirect)).build();

}

}On my HTML page (Qute template), I offer a login button like this:

<a class="button is-light" href="javascript:location.href='/login?redirect=' + encodeURIComponent(location.href)">

Login

</a>How exactly does this work when the user presses the Login button?

The Login button will send a GET request for the page /login?redirect=…. The GET request contains a redirect=… query parameter with the URL of the currently open page. The redirect is so after the login we can get back to this page.

Quarkus will notice from the config quarkus.http.auth.permission.permit1 that /login is protected. If the user is not logged in, we will be redirected to the Keycloak login page. Once the login is done, Keycloak will redirect to the /login page. This will invoke our IndexResource.login method, where we will again redirect to the redirect parameter URL; bringing us back to the initial page the user pressed the Login button on. He is now logged in.

I hope the process is clear and it helps others to implement the same flow. To me, it looked like this is not very well documented and it felt to me like I had to come up with this solution myself and get confirmation that this was indeed the right approach.

AWS CDK & JBang

01 March 2021

I am a big of AWS and the services it offers. What I am not a big fan of, is CloudFormation; in the sense that I don’t like to write huge YAML files to define my AWS resources. An alternative approach is to use a tool like Ansible, where learning it, at least can be used also for other Cloud providers like Azure. But still, as a Java Developer, I don’t feel comforable writing extensive/large YAML or JSON files.

Meet the AWS Cloud Development Kit (CDK), which essentially allows you to define your AWS resources by writing Java code.

CDK comes with a Node-based commandline, so you will first have to install Node 10+; now, install the aws-cdk CLI tool:

sudo npm install -g aws-cdk

Maven

What you could be doing now, is scaffold a Maven project and use it to define your recourses in Java code. Within an empty directory for your project run:

cdk init app --language=java

You can now import this into your IDE of choice, define resource in Java and then deploy it using cdk deploy assuming you have a default profile for AWS set up on your system/user (check ~/.aws/credentials).

This is already quiet nice and I can recommand you to have a look at the Video series by rickpil and the great CDK API reference.

JBang

What is even cooler, is that we can use it with JBang as well.

If you take a look at the Maven project, it is just a regular project without any specific plugins. The only thing that makes it work and ties it to the cdk CLI tool, is the cdk.json in the root folder. It contains an app parameter which gives it a command to run the application (mvn -e -q compile exec:java). Actually, what is happening, is that the Java application will produce a CloudFormation template, which is than feed to AWS.

So, what we need for a minimalist AWS deployment script using JBang, is the below two files only.

///usr/bin/env jbang "$0" "$@" ; exit $?

//DEPS software.amazon.awscdk:core:1.91.0

//DEPS software.amazon.awscdk:s3:1.91.0

import software.amazon.awscdk.core.App;

import software.amazon.awscdk.core.Construct;

import software.amazon.awscdk.core.Stack;

import software.amazon.awscdk.core.StackProps;

import software.amazon.awscdk.services.s3.Bucket;

import static java.lang.System.*;

import java.util.Arrays;

public class awsdeployment extends Stack {

public static void main(final String[] args) {

App app = new App();

new awsdeployment(app, "AwsCdkTestStack");

app.synth();

}

public awsdeployment(final Construct scope, final String id) {

this(scope, id, null);

}

public awsdeployment(final Construct scope, final String id, final StackProps props) {

super(scope, id, props);

// Create an S3 bucket

new Bucket(this, "MyBucket");

// Create other resources...

}

}{

"app": "jbang awsdeployment.java",

"context": {

"@aws-cdk/core:enableStackNameDuplicates": "true",

"aws-cdk:enableDiffNoFail": "true",

"@aws-cdk/core:stackRelativeExports": "true",

"@aws-cdk/aws-ecr-assets:dockerIgnoreSupport": true,

"@aws-cdk/aws-secretsmanager:parseOwnedSecretName": true,

"@aws-cdk/aws-kms:defaultKeyPolicies": true,

"@aws-cdk/aws-s3:grantWriteWithoutAcl": true

}

}When you run cdk deploy, it should deploy an S3 bucket named "MyBucket" to AWS.

Firestarter - A fireplace with JBang and Quarkus

17 February 2021

My dad built us a "fake fireplace" as decoration some time ago. Actually, it was a gift for my wife’s birthday. After beeing placed in the hall for some time, it finally found the perfect spot in our living room. With its new spot also came a power outlet right behind it. We thought it would be nice if the fireplace actually would be even "more fake"; so I got a spare Monitor, a Raspberry Pi and added a few lines of Java using Quarkus and JBang.

Firestarter is the final result. Essentially, it opens a browser in fullscreen and plays whichever YouTube clip you have configured. As I do not want to ask my wife to connect to the Raspberry Pi via SSH to change the clip, I added a small web-interface that can be easily opened on the phone.

Installation

First, check via java -version if you have JDK 11 installed on the Raspberry Pi. If not, run

sudo apt install default-jdk

The simplest way to use Firestarter (which uses JBang) on the Raspberry Pi is via the zero-installation approach:

curl -Ls https://sh.jbang.dev | bash -s - firestarter@38leinaD

Autostart

If you would like to have Firestarter autostart after booting to the desktop, you just have to place below file in ~/.config/autostart/

[Desktop Entry] Type=Application Name=firestarter uomment=Starts the firestarter app on startup Exec=/bin/bash -c "sleep 10 && curl -Ls https://sh.jbang.dev | bash -s - firestarter@38leinaD" NotShowIn=GNOME;KDE;XFCE;

The sleep is just to wait a few seconds after boot so the Wifi is connected. Otherwise, the curl might fail. There are more reliable alternatives but it is good enough for me.

Move mouse

I had the problem that the mouse was placed on the taskbar after boot. That position is over the YouTube clip in Chrome later on and thus title and controls will stay visible.

To move the mouse out of the way, I used the answer to this stackoverflow question and removed @point-rpi from /home/pi/.config/lxsession/LXDE-pi/autostart.

Changing the Fireplace Video

Open http://pi.local:8080/firestarter (or whatever is the name/IP of your Raspberry Pi on the local network) from any Browser in your local network and paste the Url of a YouTube clip.

This one is especially nice if you are a fan of pixel-art: A Pixel Fireplace.

JBang Installation on Rasperry Pi

Just as a side-note: JBang is just a great tool for writing small scripts with Java. There are multiple ways to use/install JBang on the Raspberry Pi. One possibility is to install it via Snap. Unfortunately, it does not have the latest version of JBang currently (see here). So, firestarter will not work with the JBang version from Snap currently. Once the JBang version on Snap is updated, you could use it like this as well:

sudo snap install jbang --classic jbang run firestarter@38leinaD

|

Note

|

Update: As of now, Snap hosts JBang 0.66.1 which is the latest version and is working with firestarter as well. |

Quarkus - StdOut to Log

14 November 2020

Quarkus uses JBoss Logging as it’s default logging implementation but you will not see System.out.println calls gettings routed to a logger.

From from JBoss/Wildfly, I am used to this and thus these calls end up in the log-file.

This is currently not done in Quarkus (see this issue.

If you enable logging to a file via quarkus.log.file.enable=true in your application.properties, you will not see these calls in your log-file.

Below is a simple class you can use to route all System.out.println calls in Quarkus to the logging system.

import java.io.OutputStream;

import java.io.PrintStream;

import org.jboss.logging.Logger;

import org.jboss.logging.Logger.Level;

public class JBossLoggingOutputStream extends OutputStream {

private final Logger logger;

private final Level level;

private final StringBuffer buffer = new StringBuffer();

public JBossLoggingOutputStream (Logger logger, Level level) {

this.logger = logger;

this.level = level;

}

public void write (int b) {

byte[] bytes = new byte[1];

bytes[0] = (byte) (b & 0xff);

String str = new String(bytes);

if (str.equals("\n")) {

flush ();

}

else {

buffer.append(str);

}

}

public void flush () {

logger.log (level, buffer);

buffer.setLength(0);

}

public static PrintStream createPrintStream(Logger logger, Level level) {

return new PrintStream(new JBossLoggingOutputStream(logger, level));

}

}You should activate this class early on in your application. For example, by observing the StartupEvent.

@ApplicationScoped

public class Startup {

void onStart(@Observes StartupEvent ev) {

System.setOut(JBossLoggingOutputStream.createPrintStream(Logger.getLogger("io.quarkus"), Level.INFO));

System.out.println("Application started.")

}

}You should see that the text Application started is shown in the console output with proper timestamp and thread information. Also, it ends up in your log-file if you have configured it properly.

Access of Jars from Gradle cache

25 September 2020

There are times where you quickly need the path to a JAR-file from the Gradle cache. For Maven this is quiet straight-forward as the path of a file in the local Maven cache (~/.m2/repository) is determined alone by the GAV coordinates.

This is not the case for Gradle. Files are located under ~/.gradle/caches but the folder-names look like they are hash-values and the only way I know how to get the path of a JAR-file is by actually running a Gradle build-script that downloads and resolves the dependency.

For this reason, I now have a small shell-script that does it exactly that:

#!/bin/bash

# gradle-resolve.sh

tmp_dir=$(mktemp -d)

cat << EOF > $tmp_dir/build.gradle

plugins {

id 'java'

}

repositories {

jcenter()

}

dependencies {

implementation "$2"

}

tasks.register("getClasspath") {

doLast {

println configurations.runtimeClasspath.join(':')

}

}

tasks.register("getJar") {

doLast {

println configurations.runtimeClasspath[0]

}

}

EOF

(cd $tmp_dir && gradle $1 --console=plain --quiet)It can be invoked with getJar to get the path of the JAR in the Gradle cache:

gradle-resolve.sh getJar org.jboss:jandex:2.0.5.Final /home/daniel/.gradle/caches/modules-2/files-2.1/org.jboss/jandex/2.0.5.Final/7060f67764565b9ee9d467e3ed0cb8a9c601b23a/jandex-2.0.5.Final.jar

Or it can be invoked with getClasspath to get the whole runtime-classpath.

gradle-resolve.sh getClasspath org.eclipse.jetty:jetty-server:9.4.29.v20200521 /home/daniel/.gradle/caches/modules-2/files-2.1/org.eclipse.jetty/jetty-server/9.4.29.v20200521/2c6590067589a0730223416c3157b1d4d121b95b/jetty-server-9.4.29.v20200521.jar:/home/daniel/.gradle/caches/modules-2/files-2.1/javax.servlet/javax.servlet-api/3.1.0/3cd63d075497751784b2fa84be59432f4905bf7c/javax.servlet-api-3.1.0.jar:/home/daniel/.gradle/caches/modules-2/files-2.1/org.eclipse.jetty/jetty-http/9.4.29.v20200521/21b761eae53b8e5201fb8fdf03b9865116a29b47/jetty-http-9.4.29.v20200521.jar:/home/daniel/.gradle/caches/modules-2/files-2.1/org.eclipse.jetty/jetty-io/9.4.29.v20200521/ffadd07dc4e9d0783531922ed565b667ad95766e/jetty-io-9.4.29.v20200521.jar:/home/daniel/.gradle/caches/modules-2/files-2.1/org.eclipse.jetty/jetty-util/9.4.29.v20200521/4866aa5271465f1d9035c4726209e4926fe1599c/jetty-util-9.4.29.v20200521.jar

So, to run the Main-Class from the jandex jar, you can execute:

java -jar $(gradle-resolve.sh getJar org.jboss:jandex:2.0.5.Final)

Quarkus - Custom CDI Scopes

11 September 2020

Quarkus provides no full CDI implementation and as such, no support for CDI extension. This is because CDI extensions are inherently runtime-based and thus do not fit into Quarkus' model of doing as much as possible during build-time. No support for CDI extensions also means no standard support for registration of custom CDI scopes.

Well, it sounds like quiet a limitation, but actually Arc (Quarkus' CDI implementation) provides an API to register custom scopes. And as you will see, implementing a custom scope is 99% the same as you know it from standard CDI.

In this post, I will show the code for a simple custom scope that that is local to the current thread; i.e. the context keeps track of thread-local state.

Annotation

The scope is called CallScoped and that is also the the name of the annotation:

@Documented

@NormalScope

@Inherited

@Retention(RetentionPolicy.RUNTIME)

@Target({ElementType.TYPE, ElementType.METHOD, ElementType.FIELD})

public @interface CallScoped {}Context

The context-class, which contains the main login of any custom scope, I will not put here in it’s entirety but only describe what is different to a standard CDI context. You can find the CallScopeContext here.

public class CallScopeContext implements InjectableContext {

static final ThreadLocal<Map<Contextual<?>, ContextInstanceHandle<?>>> ACTIVE_SCOPE_ON_THREAD = new ThreadLocal<>();

//...

}The context-class needs to implement InjectableContext which is Quarkus specific, but extends from the standard AlterableContext. So, there are only two additional methods to implement: destroy and getState. The first is to destroy the active scope entirely; and the second allows to capture and browse the state of the context. E.g. it enables this dev-mode feature.

@Override

public void destroy() {

Map<Contextual<?>, ContextInstanceHandle<?>> context = ACTIVE_SCOPE_ON_THREAD.get();

if (context == null) {

throw new ContextNotActiveException();

}

context.values().forEach(ContextInstanceHandle::destroy);

}

@Override

public ContextState getState() {

return new ContextState() {

@Override

public Map<InjectableBean<?>, Object> getContextualInstances() {

Map<Contextual<?>, ContextInstanceHandle<?>> activeScope = ACTIVE_SCOPE_ON_THREAD.get();

if (activeScope != null) {

return activeScope.values().stream()

.collect(Collectors.toMap(ContextInstanceHandle::getBean, ContextInstanceHandle::get));

}

return Collections.emptyMap();

}

};

}Registration

The registration of the custom scope and context happens during built-time in a @BuildStep.

public class ApplicationExtensionProcessor {

@BuildStep

public void transactionContext(

BuildProducer<ContextRegistrarBuildItem> contextRegistry) {

contextRegistry.produce(new ContextRegistrarBuildItem(new ContextRegistrar() {

@Override

public void register(RegistrationContext registrationContext) {

registrationContext.configure(CallScoped.class).normal().contextClass(CallScopeContext.class) // it needs to be of type InjectableContext...

.done();

}

}, CallScoped.class));

}

}There is one slight difference to a standard CDI context. As you see, the context-class is registered during build-time by just giving the type. With CDI and a CDI extension, you would provide an instance to CDI. This way, you can create and share a single reference to your context with the CDI implementation and the application-side. I.e. for our CallScoped, the CallScopeContext offers an API to the application to start a scope on the current thread via enter and exit methods (see here).

Currently, this is a limitation of Quarkus as there is no possibility to share a single instance or access the runtime instance. But because state is usually stored in statics or thread-local, there is no problem in having actually two instances of the context-class; one used by Quarkus internally, one by the application-side. But support for this is already under consideration.

You can find the full code example here. It’s on a branch of my quarkus-sandbox repo which is a good starting point if you want to experiment with Quarkus + Quarkus Extensions (using Gradle).

jitpack.io for Gradle Plugins

29 June 2020

I extensively use the gradle-eclipse-compiler-plugin. This is a Gradle plugin that allows me to use the Eclipse JDT compiler for my Gradle builds instead of standard javac of my installed JDK.

Why is this useful? Because when I deploy e.g. a WAR file built with Gradle to an app-server and want to do remote-debugging and also hot-swap code in the debug session from my IDE, it is better to use the same compiler for both IDE and Gradle.

Otherwise this causes problems where constructs like lambda expression are compiled differently and the debug-session will not be able to swap the code; e.g. saying that methods where added or removed.

But this post is not about the usefulness of the plugin itself, but rather that it stopped working for me with Gradle 6 and I quickly wanted a fix that I can also distribute to other people. Obviously, I filed an issue and made a pull-request; but until the pull-request is merged how provide the fix to others? Meet jitpack.io which provides a maven repository for all of Github. You can request artifacts from this repository and what it will do is check out the code from GitHub and build it on the fly. You can use the version to reference specific branches or commits.

So, to use my fix/PR, I had to add the following to my Gradle project:

buildscript {

repositories {

maven { url 'https://jitpack.io' }

}

dependencies {

classpath group: 'com.github.38leinaD', name: 'gradle-eclipse-compiler-plugin', version: 'fix~unrecognized-option-SNAPSHOT'

}

}

apply plugin: 'de.set.ecj'Async Profiler for Java

02 May 2020

Async-profiler is a low overhead sampling profiler for Java that produces nice flamegraphs to quickly see where CPU-cycles are eaten (event=cpu). It’s nice that it also shows where cycles are eaten in native code and is not biased twoards your application byte-code.

It also allows to analyze heap allocations (event=alloc).

You can either attach it to an already running Java application or use an agent to to attach it on startup.

Attach via agent on startup

java -agentpath:/home/daniel/tools/async-profiler-1.7-linux-x64/build/libasyncProfiler.so=start,event=cpu,file=/tmp/profile-cpu.svg,interval=1000000,framebuf=2000000,simple -jar target/myapp.jar

Attach to already running process

profiler.sh -e cpu -f /tmp/profile-cpu.svg -i 1000000 -b 2000000 -s <process-id>

Quarkus - Building Native Images on Windows

26 April 2020

I have been having trouble in the past to build native images for Quarkus applications under Windows due to a chain of issues.

With Quarkus 1.3.2.Final, I can finally confirm that I am sucessfully able to build. See below for the steps and exact environment used.

Steps

As I don’t have a Windows system, I downloaded the VirtualBox image of Windows 10 from Microsoft.

Within the VM, I installed GraalVM 2.0.0 for Java 11. See here for the latest releases.

I extracted GraalVM and from within the bin folder I ran gu install native-image to install the native-image tool.

I also set up PATH, GRAALVM_HOME and JAVA_HOME to point to the GraalVM folder. Well, PATH obviously to the bin folder.

Now, I installed Visual Studio 2019 as it is required for the native compilation. (the description on the GraalVM page is only very high-level)

Just because it is easier to describe and provide commands, I first installed Chocolatey which is a package manager for Windows.

After this, you should be able to install Visual Studio from your Powershell (as Admin) like this:

choco install visualstudio2019-workload-vctoolsAfter this, you should find this file on your filesystem:

C:\Program Files (x86)\Microsoft Visual Studio\2019\BuildTools\VC\Auxiliary\Build\vcvars64.batFinally, run your native-image build from a Command-prompt. Note that you have to call the vcvars64.bat to have the proper build environment:

call "C:\Program Files (x86)\Microsoft Visual Studio\2019\BuildTools\VC\Auxiliary\Build\vcvars64.bat"

mvnw package -Pnative -DskipTestsQuarkus - Testing against the latest code on master

13 April 2020

Quarkus is changing quickly. If you don’t want to wait for the next release or just need to test a fix quickly, there are two options to test against the latest code on master.

Build locally

First option is to build Quarkus on your local system.

git clone https://github.com/quarkusio/quarkus.git cd quarkus ./mvnw clean install -Dquickly

Now, reference the version 999-SNAPSHOT in your gradle.properties:

quarkusPluginVersion=999-SNAPSHOT quarkusPlatformArtifactId=quarkus-bom quarkusPlatformVersion=999-SNAPSHOT quarkusPlatformGroupId=io.quarkus

This works because you should have this in your build.gradle:

repositories {

mavenLocal() // First look into local Maven repository under ~/.m2/repository

mavenCentral()

}Latest CI snapshots

Building Quarkus locally take a few minutes depending on your machine. Alternative is to use the latest snapshot that is published after each commit to master.

For this, you have to change your build.gradle to look into the snapshot repository:

repositories {

mavenLocal()

maven {

url "https://oss.sonatype.org/content/repositories/snapshots"

}

mavenCentral()

}You will have to do essentially the same in your settings.gradle because the repository for the Gradle plugin is resolved from here:

pluginManagement {

repositories {

mavenLocal()

// Added the snapshots repo here!

maven {

url "https://oss.sonatype.org/content/repositories/snapshots"

}

mavenCentral()

gradlePluginPortal()

}

plugins {

id 'io.quarkus' version "${quarkusPluginVersion}"

}

}Obviously, you will also have to make the change to your gradle.properties like above.

Gradle by default caches snaptshots for 24 hours. If you want to force Gradle to pull the latest snapshot, you can run the build like this:

./gradlew build --refresh-dependencies

Debugging Quarkus tests (from Gradle-based build)

11 April 2020

If you dive deeper into Quarkus and develop more serious applications it shows that Gradle is only the second consideration after Maven. But it is unfair to make that argument because Quarkus also states that the Gradle-integrations is only in Preview. Anyway, I sometimes struggle to find the correct configurations that work for Gradle.

One useful config to know is: How to enable remote-debugging for your @QuarkusTest and step through the test?

It seems, the Quarkus Gradle plugin collects jvmArgs from any existing Test task. That’s why you can enable the debugger like this:

test {

jvmArgs '-agentlib:jdwp=transport=dt_socket,server=y,suspend=y,address=5005'

}Quarkus - Understanding GraalVM native-image & Reflection on the example of XML-parsing

10 April 2020

A long time ago I wrote a post on how to build a native-image with GraalVM. Lately, I have been doing the same in the context of Quarkus. In this post I want describe what I have learned about native-image and reflection in the context of Quarkus; but not necessarily limited to Quarkus.

It started with me wanting to build a native application for a simple Quarkus application that uses a JDK API for XML processing. I.e. it uses code like this:

private boolean isValidXmlFile(Path p) {

try {

if (p == null) return false;

if (!p.toFile().exists()) return false;

SAXParserFactory factory = SAXParserFactory.newInstance();

factory.setValidating(false);

factory.setNamespaceAware(true);

SAXParser parser = factory.newSAXParser();

XMLReader reader = parser.getXMLReader();

reader.parse(new InputSource(new FileInputStream(p.toFile())));

return true;

}

catch (SAXParseException spe) {

return false;

}

catch (Exception e) {

logger.error(String.format("Error while determining if file (%s) is a valid XML-file.", p.getFileName().toString()), e);

return false;

}

}I tried to build a native image by executing ./gradlew nativeImage and got this error when runing the native application.

Exception in thread "main" javax.xml.parsers.FactoryConfigurationError: Provider com.sun.org.apache.xerces.internal.jaxp.SAXParserFactoryImpl not found

at javax.xml.parsers.FactoryFinder.newInstance(FactoryFinder.java:194)

at javax.xml.parsers.FactoryFinder.newInstance(FactoryFinder.java:147)

at javax.xml.parsers.FactoryFinder.find(FactoryFinder.java:271)

at javax.xml.parsers.SAXParserFactory.newInstance(SAXParserFactory.java:147)

at de.dplatz.bpmndiff.entity.Diff.isValidXmlFile(Diff.java:122)

at de.dplatz.bpmndiff.entity.Diff.determineIfSupported(Diff.java:113)

at de.dplatz.bpmndiff.entity.Diff.ofPaths(Diff.java:95)

at de.dplatz.bpmndiff.entity.Diff.ofPaths(Diff.java:73)

at de.dplatz.bpmndiff.control.Differ.diff(Differ.java:39)

at de.dplatz.bpmndiff.boundary.DiffResource.diff(DiffResource.java:31)

at de.dplatz.bpmndiff.boundary.DiffResource_ClientProxy.diff(DiffResource_ClientProxy.zig:51)

at de.dplatz.bpmndiff.UICommand.call(UICommand.java:65)

at de.dplatz.bpmndiff.UICommand.call(UICommand.java:27)

at picocli.CommandLine.executeUserObject(CommandLine.java:1783)

at picocli.CommandLine.access$900(CommandLine.java:145)

at picocli.CommandLine$RunLast.executeUserObjectOfLastSubcommandWithSameParent(CommandLine.java:2150)

at picocli.CommandLine$RunLast.handle(CommandLine.java:2144)

at picocli.CommandLine$RunLast.handle(CommandLine.java:2108)

at picocli.CommandLine$AbstractParseResultHandler.execute(CommandLine.java:1975)

at picocli.CommandLine.execute(CommandLine.java:1904)

at de.dplatz.bpmndiff.UICommand.run(UICommand.java:55)

at de.dplatz.bpmndiff.UICommand_ClientProxy.run(UICommand_ClientProxy.zig:72)

at io.quarkus.runtime.ApplicationLifecycleManager.run(ApplicationLifecycleManager.java:111)

at io.quarkus.runtime.Quarkus.run(Quarkus.java:61)

at io.quarkus.runtime.Quarkus.run(Quarkus.java:38)

at io.quarkus.runner.GeneratedMain.main(GeneratedMain.zig:30)

Caused by: java.lang.ClassNotFoundException: com.sun.org.apache.xerces.internal.jaxp.SAXParserFactoryImpl

at com.oracle.svm.core.hub.ClassForNameSupport.forName(ClassForNameSupport.java:60)

at java.lang.Class.forName(DynamicHub.java:1197)

at javax.xml.parsers.FactoryFinder.getProviderClass(FactoryFinder.java:119)

at javax.xml.parsers.FactoryFinder.newInstance(FactoryFinder.java:183)

... 25 more

If you have read my previous post, you already know that a JSON-file needs to be provided to native-image so reflection can be used on these classes during runtime of the native application.

Based on the error, I was able to construct a file reflect-config.json with this content:

[

{

"name": "com.sun.org.apache.xerces.internal.jaxp.SAXParserFactoryImpl",

"methods": [

{

"name": "<init>",

"parameterTypes": []

}

]

}

]

Where does this file have to be placed so native-image picks it up? For Quarkus, there are three options:

-

Place in

src/main/resourcesand reference via application.properties (see QUARKUS - TIPS FOR WRITING NATIVE APPLICATIONS) -

Place in

src/main/resourcesand reference via build.gradle (see QUARKUS - TIPS FOR WRITING NATIVE APPLICATIONS) -

Place in

src/main/resources/META-INF/native-imageand no further configuration is needed. It will be picked up automatically by convention.

For some reason, this third and simplest solution is not mentioned in the Quarkus guide; but maybe this is a new feature in GraalVM.

Resource Bundles

After having done this, I build the native image again and ran my application. When it tried to parse a non-XML-file I was getting this new error:

java.util.MissingResourceException: Could not load any resource bundle by com.sun.org.apache.xerces.internal.impl.msg.XMLMessages

at jdk.xml.internal.SecuritySupport.lambda$getResourceBundle$5(SecuritySupport.java:274)

at java.security.AccessController.doPrivileged(AccessController.java:81)

at jdk.xml.internal.SecuritySupport.getResourceBundle(SecuritySupport.java:267)

at com.sun.org.apache.xerces.internal.impl.msg.XMLMessageFormatter.formatMessage(XMLMessageFormatter.java:74)

at com.sun.org.apache.xerces.internal.impl.XMLErrorReporter.reportError(XMLErrorReporter.java:357)

at com.sun.org.apache.xerces.internal.impl.XMLErrorReporter.reportError(XMLErrorReporter.java:327)

at com.sun.org.apache.xerces.internal.impl.XMLScanner.reportFatalError(XMLScanner.java:1471)

at com.sun.org.apache.xerces.internal.impl.XMLDocumentScannerImpl$PrologDriver.next(XMLDocumentScannerImpl.java:1013)

at com.sun.org.apache.xerces.internal.impl.XMLDocumentScannerImpl.next(XMLDocumentScannerImpl.java:605)

at com.sun.org.apache.xerces.internal.impl.XMLNSDocumentScannerImpl.next(XMLNSDocumentScannerImpl.java:112)

at com.sun.org.apache.xerces.internal.impl.XMLDocumentFragmentScannerImpl.scanDocument(XMLDocumentFragmentScannerImpl.java:534)

at com.sun.org.apache.xerces.internal.parsers.XML11Configuration.parse(XML11Configuration.java:888)

at com.sun.org.apache.xerces.internal.parsers.XML11Configuration.parse(XML11Configuration.java:824)

at com.sun.org.apache.xerces.internal.parsers.XMLParser.parse(XMLParser.java:141)

at com.sun.org.apache.xerces.internal.parsers.AbstractSAXParser.parse(AbstractSAXParser.java:1216)

at com.sun.org.apache.xerces.internal.jaxp.SAXParserImpl$JAXPSAXParser.parse(SAXParserImpl.java:635)

at de.dplatz.bpmndiff.entity.Diff.isValidXmlFile(Diff.java:129)

at de.dplatz.bpmndiff.entity.Diff.determineIfSupported(Diff.java:113)

at de.dplatz.bpmndiff.entity.Diff.ofPaths(Diff.java:95)

at de.dplatz.bpmndiff.entity.Diff.ofPaths(Diff.java:73)

at de.dplatz.bpmndiff.control.Differ.diff(Differ.java:39)

at de.dplatz.bpmndiff.boundary.DiffResource.diff(DiffResource.java:31)

at de.dplatz.bpmndiff.boundary.DiffResource_ClientProxy.diff(DiffResource_ClientProxy.zig:51)

at de.dplatz.bpmndiff.UICommand.call(UICommand.java:65)

at de.dplatz.bpmndiff.UICommand.call(UICommand.java:27)

at picocli.CommandLine.executeUserObject(CommandLine.java:1783)

at picocli.CommandLine.access$900(CommandLine.java:145)

at picocli.CommandLine$RunLast.executeUserObjectOfLastSubcommandWithSameParent(CommandLine.java:2150)

at picocli.CommandLine$RunLast.handle(CommandLine.java:2144)

at picocli.CommandLine$RunLast.handle(CommandLine.java:2108)

at picocli.CommandLine$AbstractParseResultHandler.execute(CommandLine.java:1975)

at picocli.CommandLine.execute(CommandLine.java:1904)

at de.dplatz.bpmndiff.UICommand.run(UICommand.java:55)

at de.dplatz.bpmndiff.UICommand_ClientProxy.run(UICommand_ClientProxy.zig:72)

at io.quarkus.runtime.ApplicationLifecycleManager.run(ApplicationLifecycleManager.java:111)

at io.quarkus.runtime.Quarkus.run(Quarkus.java:61)

at io.quarkus.runtime.Quarkus.run(Quarkus.java:38)

at io.quarkus.runner.GeneratedMain.main(GeneratedMain.zig:30)

So, it seems not only reflection needs to be configured for native-image builds, but also resources and resource-bundles (e.g. localized error message). I solved this by placing a resource-config.json in the same folder:

{

"resources": [],

"bundles": [

{"name":"com.sun.org.apache.xerces.internal.impl.msg.XMLMessages"}

]

}

After this, my native application was working succesfully.

There are two things to note here:

-

Normally, this kind of configuration should not be needed for JDK-internal classes and APIs like the SAXParser. Unfortunately, there is a pending issue about the

java.xmlmodule: https://github.com/oracle/graal/issues/1387. -

Adding the

com.sun.org.apache.xerces.internal.impl.msg.XMLMessagesresource-bundle should also not be necessary. But even if it would be working, there is still an issue that only the default locale is added to the native application; other locales would need to be added via the mechansim I have described (e.g.com.sun.org.apache.xerces.internal.impl.msg.XMLMessages_defor german messages). See the issue for details: https://github.com/oracle/graal/issues/911.

Automatically generating config files.

What I have done up to now is write the files manually. Is there a simpler way? Well, I don’t really have much experience yet with generating these files but it can be done:

GraalVM comes with an agent that can be used to trace all the reflective access when running your application in normal JVM-mode.

java -agentlib:native-image-agent=trace-output=/home/daniel/junk/trace.json -jar my-app.jar

This will generate a trace of all reflective access and you can use it as help to generate your configuration manually.

Even simpler, the agent can be used to create the files that you can place under src/main/resources/META-INF/native-image:

java -agentlib:native-image-agent=experimental-class-loader-support,config-output-dir=../src/main/resources/META-INF/native-image/ -jar my-app.jar

Would this have helped us with the SAXParser problem from above? Unfortunately not. At least not currently, because the agent specifically will not generate configuration for relective access of JDK-internal classes; it is only meant for libraries external to the JDK. Why? Because normally, it is assumed that all JDK internals are handled without any configuration needed. Unfortnunately, we have seen that this is currently not the case for the jaxa.xml module.

Using JDK Preview Features from a Gradle-based Eclipse-project

01 March 2020

This post is about working with preview features of Java (e.g. JDK 13’s switch expressions preview) from your Gradle project within Eclipse IDE with zero manual configuration. On top of that, my project uses Quarkus which only makes a minimal difference as we have to consider the dev-mode as well.

If you are working with javac and java on the command-line only, it is very simple in general: You have to pass --enable-preview as an argument to javac and java.

In your build.gradle file you can do it like this:

// Enable for Java Compiler on src/main/java

compileJava {

options.compilerArgs += ["--enable-preview"]

}

// Enable for Java Compiler on src/test/java

compileTestJava {

options.compilerArgs += ["--enable-preview"]

}

// Enable for running tests

test {

jvmArgs '--enable-preview'

}

// Enable for Quarkus DevMode runner which was my main use-case

quarkusDev {

jvmArgs '--enable-preview'

}If you use preview features in your source-code, running a gradlew build on the commandline should now compile your code.

You can run the built JAR with java --enable-preview -jar app.jar.

In case you want to run your application from Gradle, you will have to configure the JVM args for this as well in your build.gradle; See the JavaExec task.

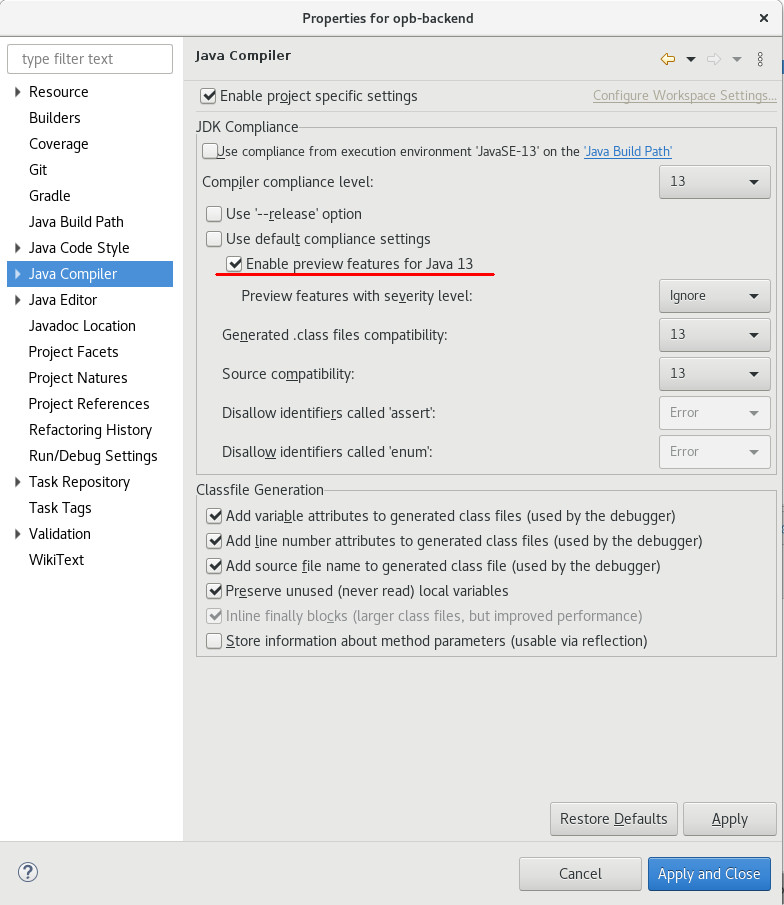

Unfortunately, Eclipse will not automatically infer the right settings for the Eclipse compiler and will show compile errors in your IDE. The quick fix is to manually enable the preview feature in the Java Compiler project-settings (right-click on the project; Properties > Java Compiler; check 'Enable preview features for Java 13'), but I would prefer that there are no manual steps needed. I.e. a team member should be able to clone a Git repo, import it into Eclipse and all should be set up automatically.

On our way of achieving this, you first have to add this to our build.gradle:

// Add plugin at top of your build.gradle

apply plugin: 'eclipse'

// ...

//Buildship doesn't use that hooks (https://discuss.gradle.org/t/when-does-buildship-eclipse-customization-run/20781/2)

//you need to run `gradle eclipseJdt` separately

eclipse.jdt.file.withProperties { props ->

props['org.eclipse.jdt.core.compiler.problem.enablePreviewFeatures']= 'enabled'

props['org.eclipse.jdt.core.compiler.problem.reportPreviewFeatures']= 'ignore'

}It found it in this Eclipse JDT Github issue.

When you now run the Gradle-task eclipseJdt you can do a Refresh of your Gradle project in Eclipse afterwards and you should see that the Java Compiler settings in Eclipse also have been properly set.

The ultimate goal is that we don’t have to run this gradle-task manually. To achieve this, we can leverage another quiet new Buildship feature that triggers a gradle task whenever a project is imported into Eclipse or the project is refreshed. You can read about it here.

eclipse {

synchronizationTasks eclipseJdt

}This is the last missing piece. Once you import the project into Eclipse, this task will automatically trigger and configure the Eclipse compiler. No manual steps or instructions you have to give to your team mates how to get the project imported properly.

I was expecting this task to also be triggered when you run "Refresh Gradle Project" for an already imported project, but this did not work for me yet. Instead, I had to delete the project from Eclipse and import it again. I still have to find out why.

Improving on Integration-Testing (with Arquillian)

04 September 2019

Introduction

Arquillian is a testing-framework for Jakarta EE applications (formerly Java EE).

System-tests are run as a remote-client invoking a boundary-component of the tested application; e.g. an exposed JAX-RS/REST-endpoint.

Integration-tests are run within the container; allowing to test internal components of the application; e.g. you can inject an EJB or CDI-bean into your test and invoke a method.

Both types of tests have advantages and disadvantages where I find that the disadvantages of Integration-tests often outweight the benefits (in my projects).

|

Note

|

You can find a good introduction on different testing-techniques and their advantages/disadvantages in this article series by Sebastian Daschner. |

Let me explain: The Jakarta EE applications that I am involved with are usually large, business-focused applications. This means, that I am rarely interested in testing the framework or the container. I am interested in testing how the application behaves in the correct way from a business-perspective. This can often be done quiet nice by calling external REST endpoints. My development-cycle involves a deployed/running application that allows me to hot-swap small code-changes (e.g. via Java’s remote-debugging API) and then invoke the system-test again to see if I get the expected result. Rinse and repeat.

Integration-tests on the other-hand don’t allow me the quick feedback cycle I get from system-tests. As the tests themselfs run in the server/application (and thus are deployed as part of the main WAR/EAR), I have to deploy a whole WAR/EAR to the app-server, run the tests and shut down the container again. If i make a change to the application-code or test, I have to repeat this rather long cycle where I do a full deployment.

The cycle is especially long when the application is not very modular/loosely coupled. Arquillian theoretically allwows me to build small test-deployments with Shrinkwrap but depending on the application the test-archive often has same magnitude as the whole application. So, deployment and thus testing is slow.

What I somtimes would like to have is the quick feedback-loop I get with system-tests but beeing able to test internals of the application that are not exposed via a Rest-endpoint.

How can we get integration-tests that behave more like system-tests? How can we get system-tests that allow us to call internal components of the application?

WarpUnit

Meet WarpUnit. I have been reading about it some time ago and found the idea quiet nice. It is a small testing-solution which allows you to run a system-test but be able to have snippets of code (lambda expressions) that are invoked within the container on server-side. Actually, the approach even allows injection of server-components similar to Arquillian’s integration-tests. Have a look at this very neat concept.

public class GreeterGrayBoxTest {

@Inject

Greeter greeter;

@Test

public void testGreeter() {

System.out.println("This is printed in the JUnit test output");

WarpGate gate = WarpUnit.builder()

.primaryClass(GreeterGrayBoxTest.class)

.createGate();

String greetingForBob = gate.warp(() -> {

System.out.println("This is printed in the server log");

return greeter.greet("Bob");

});

Assert.assertEquals("Greetings, Bob !",greetingForBob);

}

}What happens here is that the gate.warp()-call will take the bytecode of our GreeterGrayBoxTest class, upload it to the server, load it via a custom class-loader and invoke the lambda within the server.

Even though the repo did not see a commit for a long time, the solution works when you use it with a recent Wildfly or Liberty. (Actually, the maintainers invited me to contribute and I made a small pull-request to fix the build; a jboss/redhat maven repo URL had changed.)

|

Note

|

Just found out about Arquillian Warp which seems to follow a similar approach. |

Taking it to the next Level

What I would like to have as a final solution is something that can transparently run as an Arquillian integration-test but can also be invoked like a WarpUnit-style test from outside the application-server.

You can find my proof-of-concept solution on GitHub.

@RunWith(Warp.class)

public class ArquillianStyleIntegrationTest {

@Inject

Greeter greeter;

@Test

public void testGreeter() {

System.out.println("This is printed in the server log");

String result = greeter.greet("Bob");

assertThat(result, is("Greetings, Bob !"));

}

}Here, the whole testGreeter method is run within the application-server instead of just running some code-snippets in the server. This is a great approach while doing development because I can make quick-changes in my test-code and rerun the test. When I am done, the approach allows me to just swtich the annotation from @RunWith(Warp.class) to @RunWith(Arquillian.class) and I am able to run it as a regular arquillian integration-tests.

Obviously, it would be nice to have a deeper arquillian integration that does not require me to change the annotation for this. Instead, it should be transparently handled by an arquillian extension. But this is for the future; after seeing if this approach works in real-world projects.

Javascript Router-friendly packaging as WAR-archive

26 August 2019

Routers in modern Javascript framworks usually support path’s similar to a Restful API. I.e. when the main page is localhost:8080 and shows the landing page; then localhost:8080/products/1 might show the page with the details for Product #1.

For a single-page application an in-app link to localhost:8080/products/1 should not trigger a reload of the whole application but should be handled within the app. This is the main job of the router.

A lot of Javascript frameworks support this routing based on the browser’s history API. Vaadin Router is just one example. Similar routers exist in Angular and friends.

For this to work, the web-server needs to serve the localhost:8080/index.html for any of these sub-resources/pages. This is because the Router in the Javascript code will deconstruct the URL and show the right page-fragments.

How can this be achived in a JavaEE environment where your front-end Javascript application is packaged inside a WAR-file?

Simple. Just use this web.xml:

<web-app xmlns="http://xmlns.jcp.org/xml/ns/javaee"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://xmlns.jcp.org/xml/ns/javaee

http://xmlns.jcp.org/xml/ns/javaee/web-app_3_1.xsd"

version="3.1">

<welcome-file-list>

<welcome-file>index.html</welcome-file>

</welcome-file-list>

<error-page>

<error-code>404</error-code>

<location>/index.html</location>

</error-page>

</web-app>A programatic solution is to use a servlet-filter that always routes to the index.html. Here is from the post on Quarkus and Web UI Development.

JavaEE App Deployment on Openshift/Minishift

23 June 2019

I have been watching this free course by RedHat to get started on OpenShift. This post contains my personal notes on the most important commands and concepts for later reference.

Getting started with MiniShift

I already wanted to do the course a few month back on my laptop running CentOS linux; but for some reason I ran into problems installing MiniShift. After reinstalling my laptop with Debian, I gave it another go. There have been a few small problems that cost me some time along the way and I will describe them as well

After installing Minishift (which is a local OpenShift cluster running in a VM), the intial steps are simple:

minishift start // starts the cluster eval $(minishift oc-env) // to connect the oc commandline-tool from OpenShift to the local cluster oc login -u developer // log into the OpenShift cluster via the oc commandline-tool; password can be anything non-empty

Essentially OpenShift runs your applications in Kubernetes (MiniShift uses minikube) and Docker; so this is what minishift start will boot up in a VM. Read more about it here.

You can open the OpenShift web-console with minishift console and log in with user developer and any non-empty password. We can use it later to inspect the deployed applications and see the logs of the running containers; even connecting to a shell within the container can be done via the web console.

This is also a good place to introduce the concept of projects in OpenShift. Actually, there is also the concept of projects in Minishift, but with minishift start a default project named minishift is created and I usually get along with this single project quiet good.

For the OpenShift project this is different. You should use a single project for deploying all your modules/microservices that make up your application. So, if you are working on different customer-projects, it would be natural to also define different projects in OpenShift for it.

Here, I will be working with a project named junk. It is created and activated via

oc new-project junk

This is important later on, because Docker images we build need to be tagged with the project-name for OpenShift beeing able to use them.

Also, note that once you stop and start MiniShift, the default OpenShift project might be active (check with oc projects) and you will have to run oc project junk to activate junk; otherwise it might happen that oc commands interacte with the wrong project.

Building and deploy from source via S2I and templates

The most prominent approach for deploying your application on OpenShift is via Source-2-Image. What this means is that effectively your application is built from sources (Maven, Gradle, …) within a Docker container. The resulting artifact (e.g. WAR-file) is then put in another Docker container (e.g. Wildfly) to start the application.

Additionally, there is the concept of templates. These templates and their parameters are documented in a good way so that you basically only have the point the template to a Git Repo URL containing a Maven build. The template will do the job of building and deploying the artifact.

Minishift does not come with templates for JBoss/Wildfly preinstalled. But you can easily add a JBoss EAP 7 template by running

oc replace --force -f https://raw.githubusercontent.com/jboss-openshift/application-templates/master/eap/eap71-basic-s2i.json

You can inspect the template parameters with

oc describe template eap71-basic-s2i

Lets launch a simple Maven-based JavaEE project via the JBoss EAP 7 template:

oc new-app --template=eap71-basic-s2i -p SOURCE_REPOSITORY_URL=https://github.com/AdamBien/microservices-on-openshift.git -p CONTEXT_DIR=micro -p SOURCE_REPOSITORY_REF=master --name=micro

This approach works quiet nicely, but as you would normally build your application on a Jenkins or similar build-server, the approach seems not so useful for serious projects.

Deploy via Image Streams

From now on we assume the JavaEE WAR/EAR was built via Gradle/Maven on Jenkins and we only want to use OpenShift to deploy it.

For this we can use the concept of Image Streams. Essentially, it is just another abstraction on top of Docker.

As tags like latest (or even specific versions) can be overwritten in a Docker registry, Image Streams give Docker images a handle that can be used today or tomorrow even when the version was overwritten.

To be concrete: You deploy your application on a docker image appserver:latest, the Image Stream in OpenShift will make sure to always take the same Docker image for deployment even when containers are built after latest already points to a new image. The handle will only be updated when you proactively decide so. This allows reproduceable build/deployments and removes the level of suprise when a new deployment is pushed to production on a Friday afternoon.

To demonstrate the steps, I will be using the demo repo from the course but please note that it could be any other Maven/Gradle-based project that produces a JavaEE WAR/EAR-file.

git clone https://github.com/AdamBien/microservices-on-openshift.git cd microservices-on-openshift/micro mvn package

This should have produced a micro.war under the microservices-on-openshift/micro/target folder.

Lets first check what Image Streams OpenShift knows about (you can also reference images from DockerHub or your local docker registry but more on that later):

oc get is -n openshift

Let’s define an application using the wildfly image-stream.

oc new-app wildfly:latest~/tmp --name=micro

The trick used by Adam here is to give /tmp or some other empty folder to the command because we don’t want OpenShift to build our application. Normally, you would give the path to a Git Repo or a folder containing a pom.xml. In this case, OpenShift would do the build from source again.

Instead, we use the oc start-build command and give the already built artifact:

oc start-build micro --from-file=target/micro.war

To expose the application to the outside world via a load-balancer, run

oc expose svc micro

In the web-console you should be able to go to your project and under it to Applications/Routes. Here you will find a link to access you applications HTTP port.

The URL to access the Rest endpoint should look similar to this: http://micro-junk.192.168.42.3.nip.io/micro/resources/ping.

DNS issues

A problem that bugged me for some time was the concept of the nip.io domain and that DNS servers should resolve it to the IP given as subdomain.

It would not have been a problem if my system was set up to use e.g. the Google DNS servers. Instead, on my Debian/local network, there is some local DNS server and it was not able to resolve the nip.io domain.

To make it work, I had to set up the Google DNS servers on my system. Namely, 8.8.8.8 and 8.8.4.4. After this, I was able to call the Rest endpoint.

Local DNS

For some time I also played around with a local DNS server coming as an experimental feature, but I moved away from it again because it was not really necessary. Anyway, below are the steps if you want to try it:

export MINISHIFT_ENABLE_EXPERIMENTAL=y minishift start minishift dns start patch /etc/resolv.conf

Deleting resources

As you are playing around in OpenShift, it is often useful to start from scratch again. Actually, we should do it to demonstrate a different approach to deploy our application.

All resources in OpenShift are labeled with the application-name (oc get all -l app=micro). So, in our case, we can delete our application and all its resources by running

oc delete all -l app=micro

Image Stream from own Docker image

I assume you have run the oc delete command because we now want to deploy our micro application again, but in a different way: deployed in a Docker container that we have built ourselfs.

I.e. we want to use our own Docker images within OpenShift’s concept of Image Streams.

First, we need to connect our Docker client to the Docker runtime in MiniShift:

eval $(minishift docker-env)

Try docker ps now and you should see all the Docker containers running in your OpenShift environment.

We can now do a docker build as usual; we just have to make sure to tag it correctly.

As OpenShift exposes a Docker registry, we need to tag the image for this registry (we can get it from minishift openshift registry); and additionally, there is the convention that the image-name need to include the name of the OpenShift project and the application-name. So, the full build-command looks liḱe this:

docker build -t $(minishift openshift registry)/junk/micro . docker login -u developer -p $(oc whoami -t) $(minishift openshift registry) docker push $(minishift openshift registry)/junk/micro oc new-app --image-stream=micro oc expose svc micro oc status

Important concepts

Below are some more important concepts for deploying applications to the cloud and the respective commands.

Scale

You can scale the number of replicas/containers with below command:

oc scale --replicas=2 dc ping oc get all

As OpenShift exposes your service via a load-balancer, this is completely transparent and you might be routed to any of the started containers.

Configuration

In Java you can access environment variables via System.getenv.

This is a standard mechanism to configure you application in cloud-native applications.

Below is the command to set such an environment variable for your service.

oc set env dc/ping --list oc set env dc/ping message='Hello World'

What will happen, is that OpenShift restarts all containers and places the new config in the environment.

You application will now get Hello World when invoking System.getenv("message").

Health check

Every application should define some external health-check endpoint. This allows external tools or e.g. OpenShift to monitor the state of the application. For this, Kubernetes defines two different health-checks. Readyness probes to test if the application is ready/started; and liveness probes to test if the application is still alive and responding. Below are the commands to set each. You Rest-service simply needs to respond with HTTP responce-code 200 is everything is fine; 500 in case to indicate the opposite.

oc set probe dc/ping --liveness --get-url=http://:8080/ping/resources/health oc set probe dc/ping --readiness --get-url=http://:8080/ping/resources/health